State of DevOps – 2024

Since DevOps was born, we’ve had Git, pipelines, containers, serverless infrastructure, social coding and AI. Where does that leave DevOps today? 🤷‍♀️

Our DORA — not theirs

When we use the phrase “State of DevOps”, we are of course referring to DORA.

The EU Digital Operational Resilience Act is getting a lot of attention these days, but that is not the DORA we mean. In the DevOps community and culture, we have known DORA for exactly 10 years now, as something else.

Ours: DevOps Research and Assessment Institute (DORA)

Theirs: Digital Operational Resilience Act (DORA)

Since 2014, the DevOps Research and Assessment institute (DORA) has released an annual “State of DevOps” report. The report is based on replies from more than 39K respondents, all of them professionals working in software, and is essentially a scavenger hunt through all this data. It looks for insights and trends, and, based on correlations in the data, it sets up hypotheses, triangulated with qualitative interviews, about what is happening in the world right now (the world of DevOps, at least).

This year’s report is 120 pages, perhaps a bit much for most. But we’re DevOps nerds, so each year we wait, full of suspense, for the report to be released in late October. So of course we read it, and in this article we give you a curated selection of the most interesting highlights.

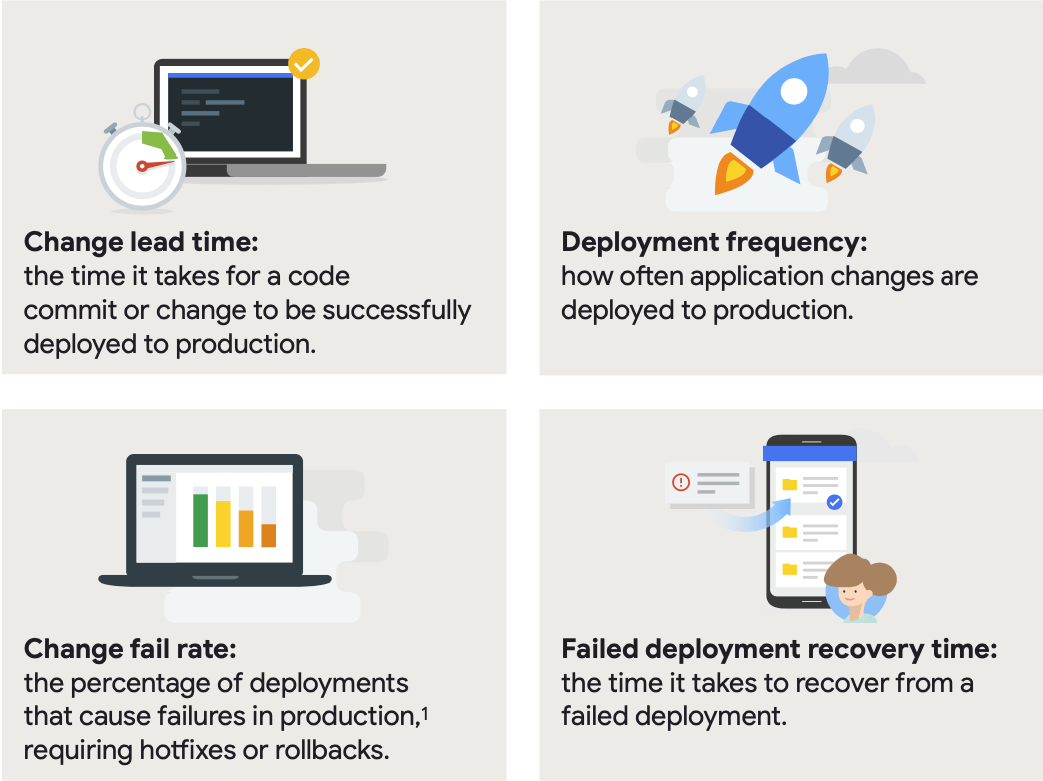

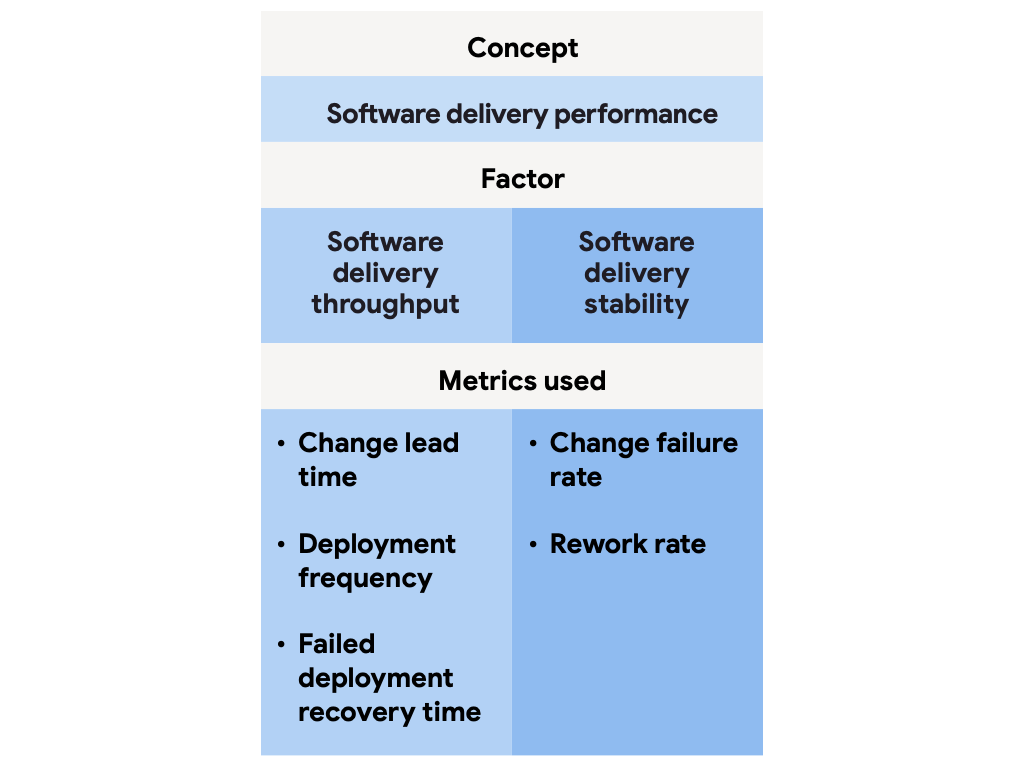

DORA basics

We have built and compiled a deck of one-pagers explaining the DORA basics for newcomers. If you, like us, sit up waiting for the report’s release, this first deck is probably too basic for you. If that’s the case, skip it and fast-forward to this year’s report findings.

Contemporary DevOps — 2024

Spoiler alert: Before we dive into the deck revealing the finer details of the anniversary report’s findings I’ll lay it out plainly: The — by far — hottest DevOps buzzwords in 2024 are still:

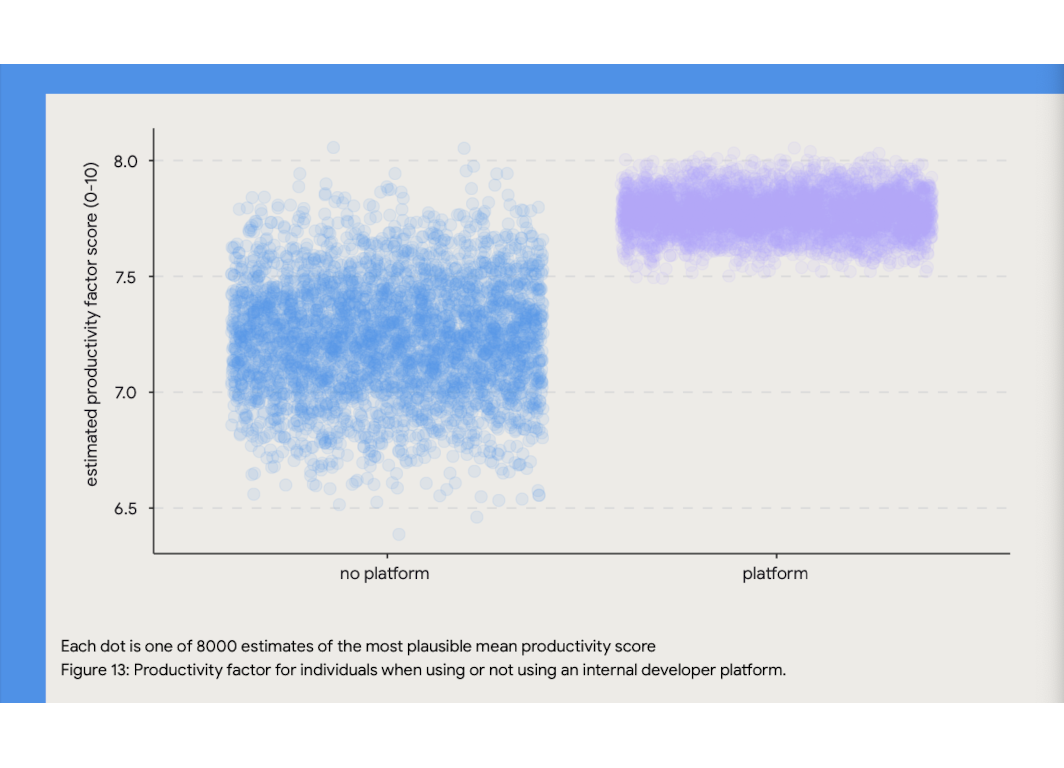

- PE: Platform Engineering

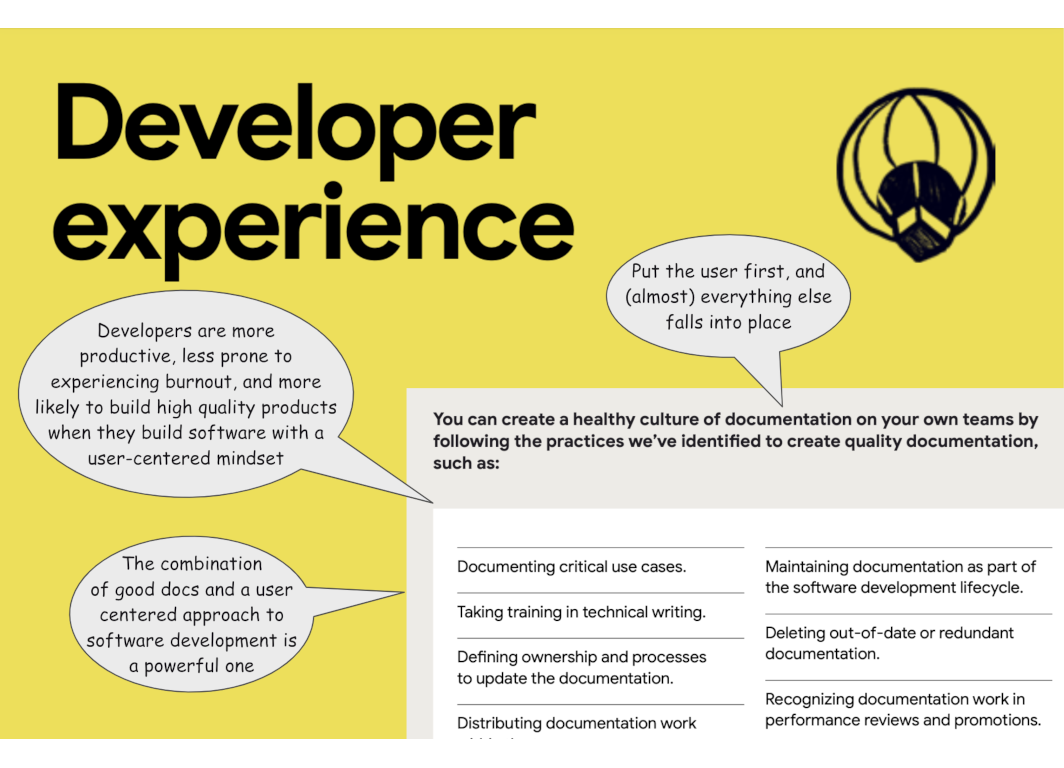

- DevX: Developer Experience

- IDP: Internal Development Platform

If any of these concepts are not yet part of your team’s daily vocabulary, rest assured they will be before next year’s report. Or learn to live with the fact that…

“The future is already here – it’s just not evenly distributed.”¹

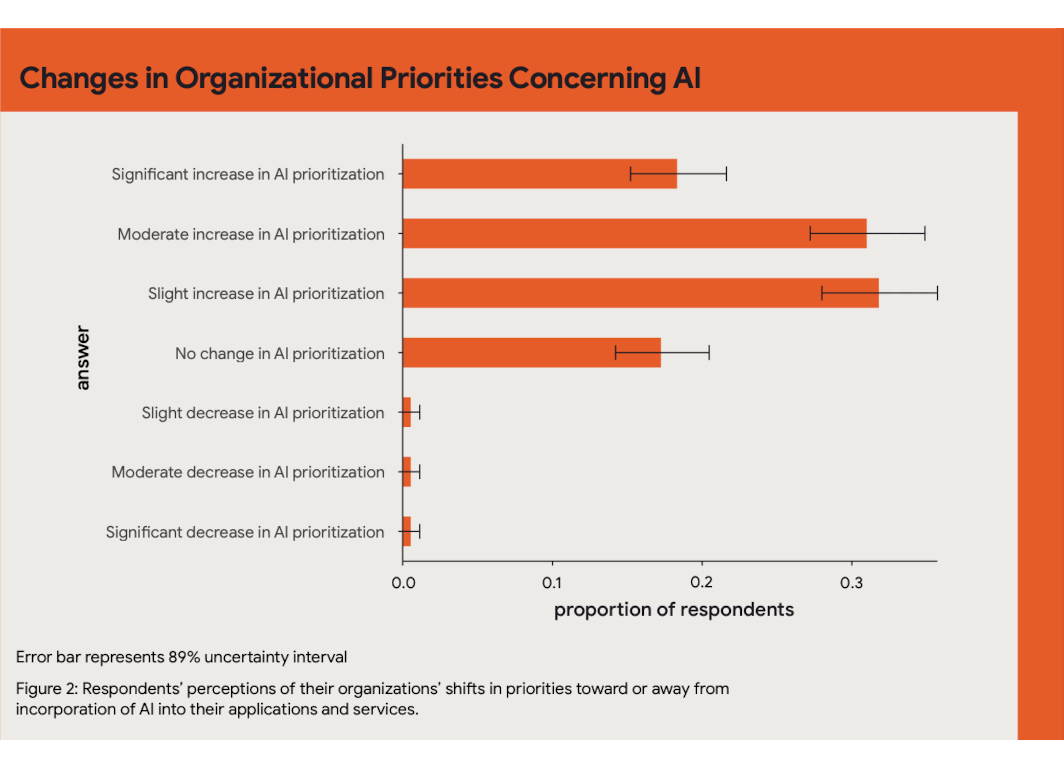

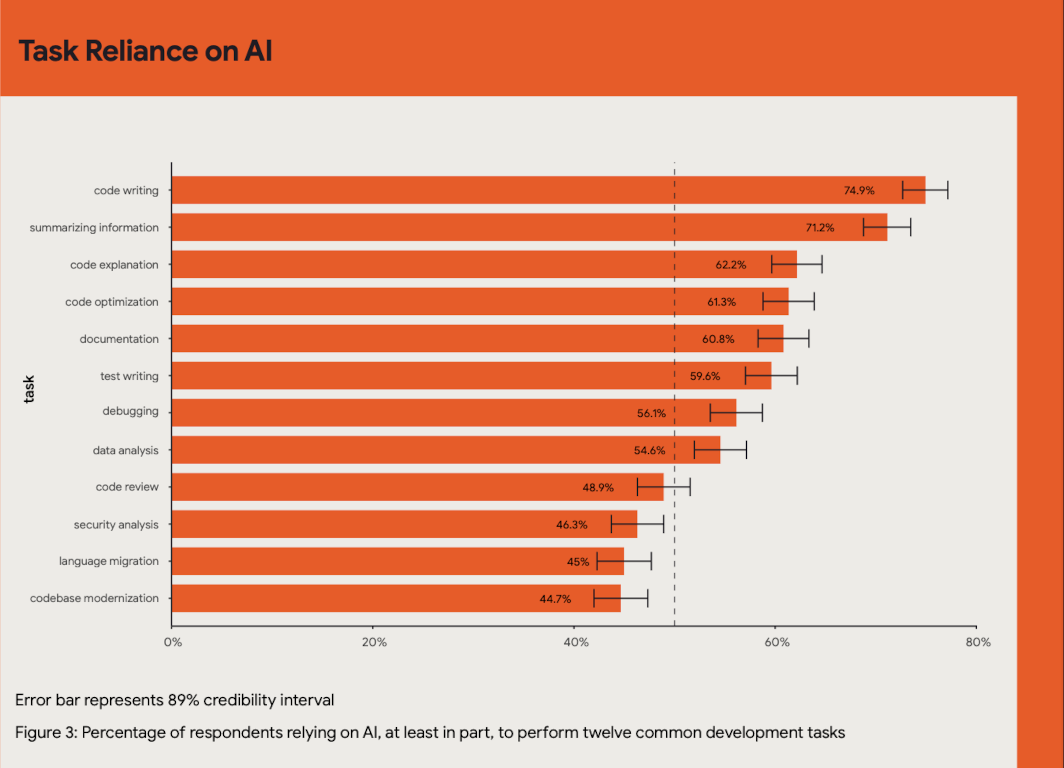

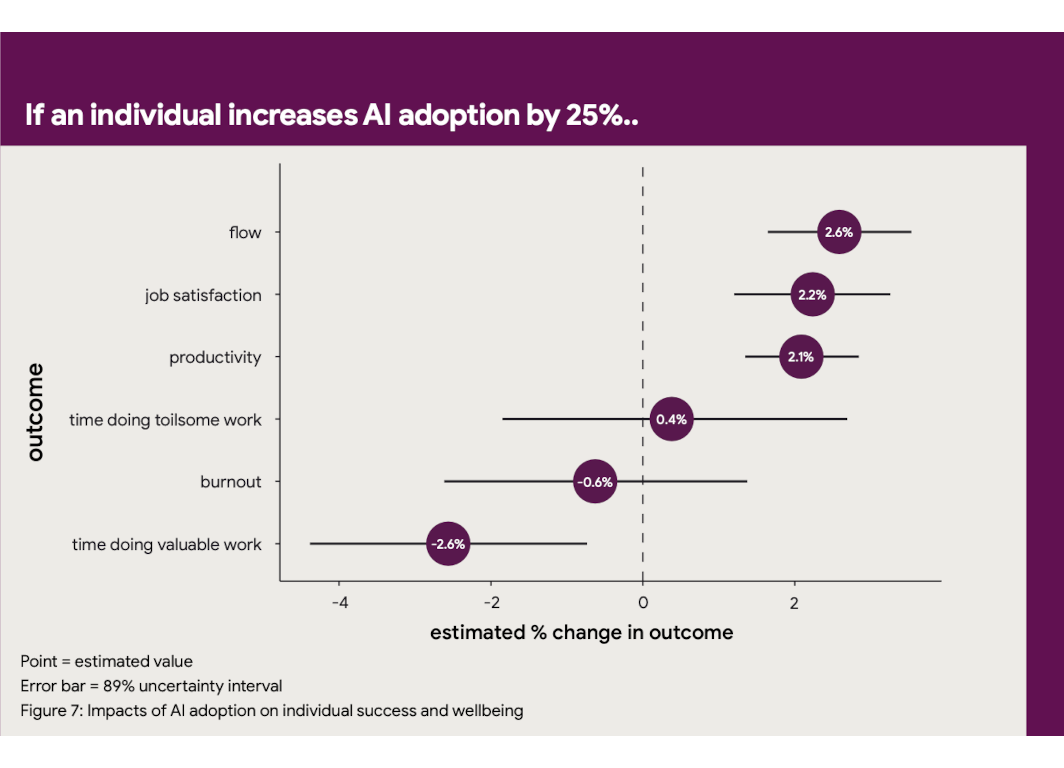

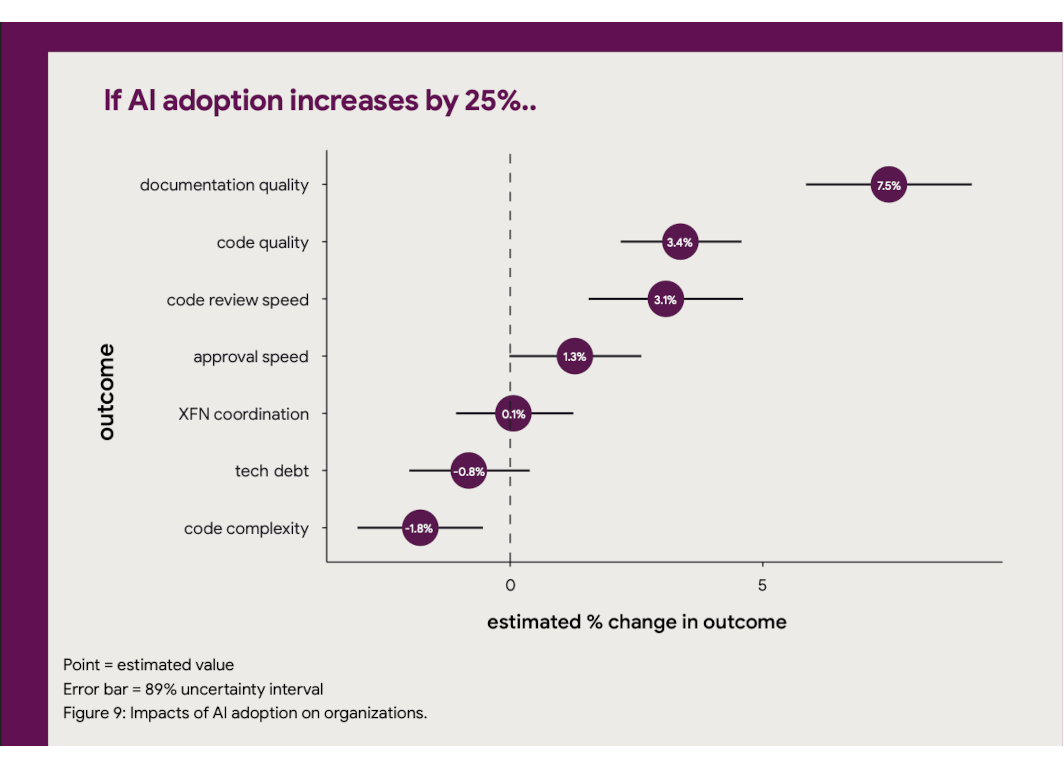

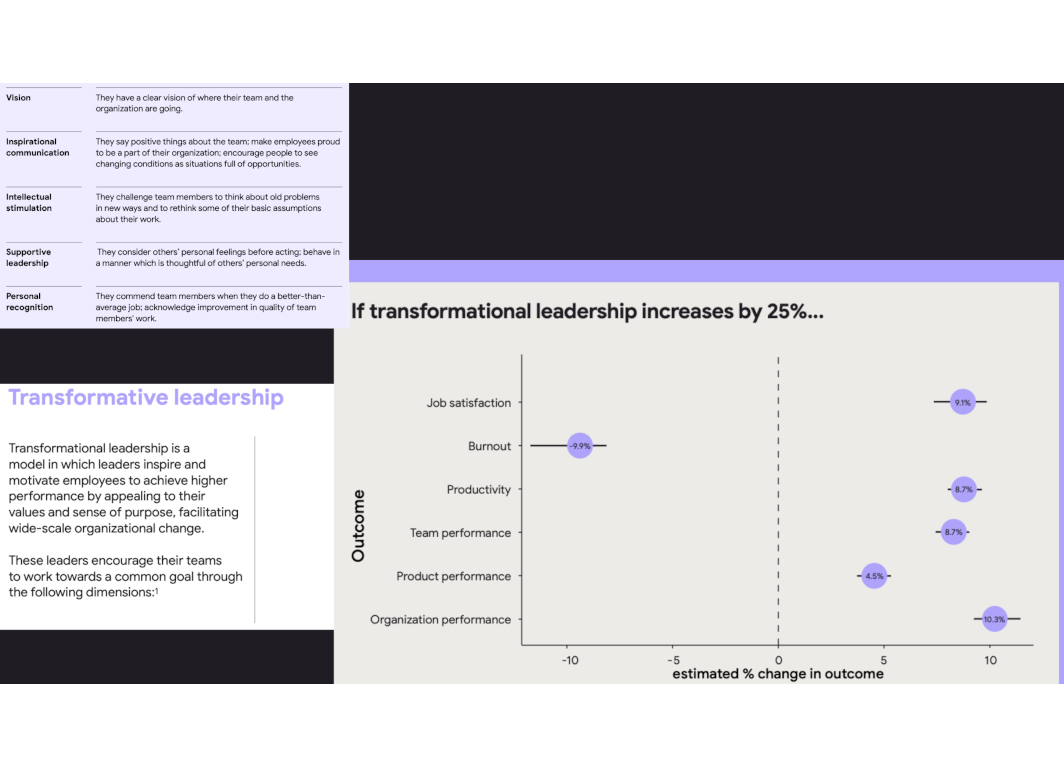

AI adoption and impact

But the report’s most jaw-dropping claims are in the realm of AI: the speed of its adoption in software development, and its impact on factors as far apart as job satisfaction, review processes and code quality, but also its apparently negative impact on software delivery performance.

IDP, DevX, PE, …and all that jazz

DORA refers to another survey revealing that, on average, respondents were willing to give up 23% of their entire future earnings if it meant they could have a job that was always meaningful.

DORA also references Stack Overflow’s 2023 Developer Survey, in which more than 90,000 developers answered questions about how they learn and level up, which tools they’re using, and which ones they want. It’s a different report entirely and worth a separate, detailed look, but from the perspective of DevX (designing the developer experience), it’s another cornucopia of information.

How is all of this useful?

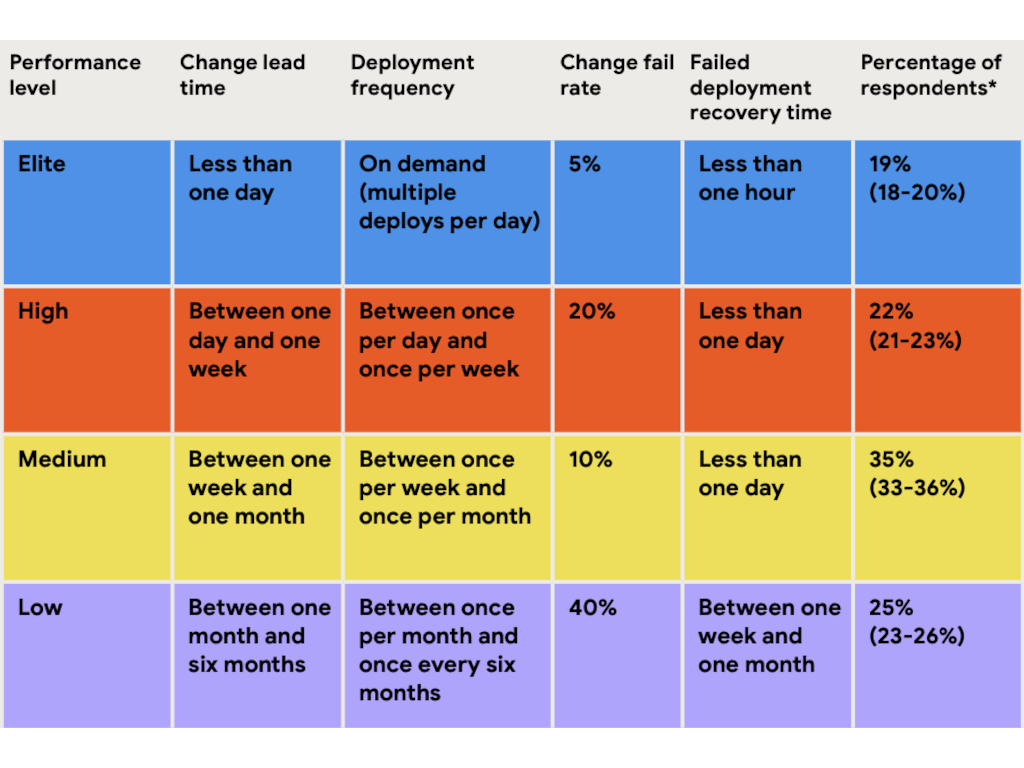

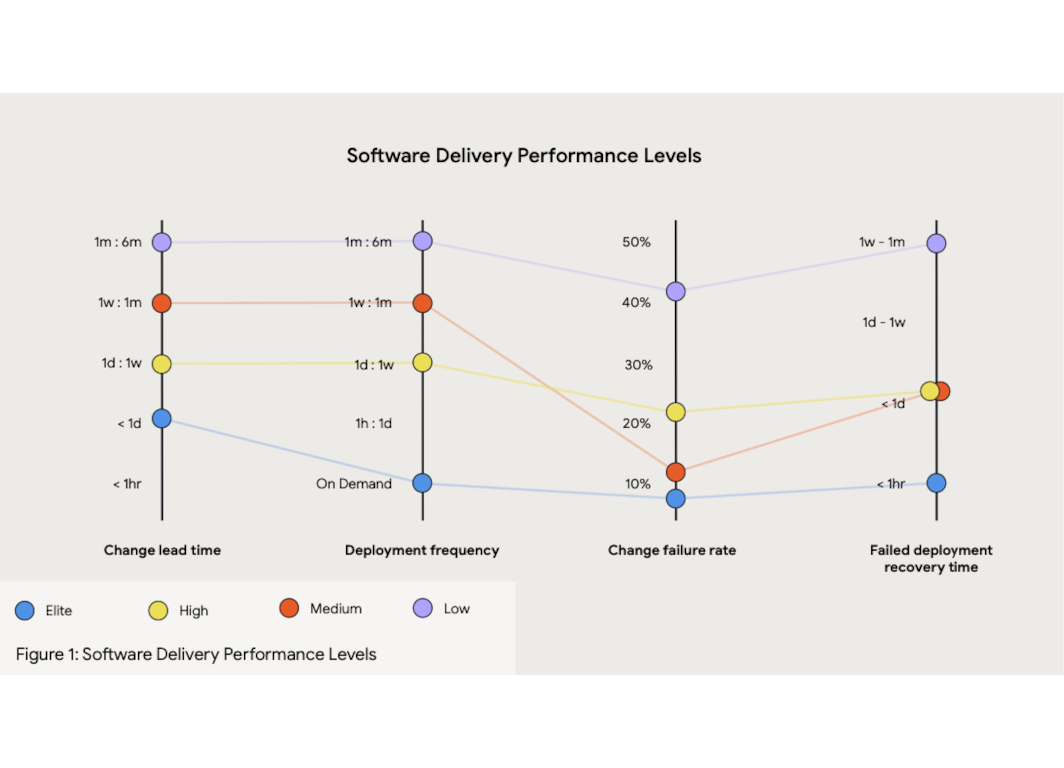

Recall the Elite performers vs. Low performers comparison?

Software is such a costly endeavor that any improvement you make to the Software Development Lifecycle (SDLC), whether in throughput, feedback from end-users, stability, overall quality or simply less rework on the code base, can become a huge financial gain. In the same process, it makes your developers more likely to stay with you rather than jump ship.

Imagine

A process where a developer cannot finish a task until a colleague has found the time to stop whatever they are doing, read through every line of the code change and act as an (often arbitrary) quality gate for a change that no end-user ever requested, but that some product owner insists is urgent. So urgent that it is added on top of all other work and demanded finished, even at the expense of overtime, so that it can become part of the manual test run 2 months before a planned release.

Imagine the developer’s mood when, 3 months later, she receives a failure report from the User Acceptance Test saying the code is wrong and the feature was misunderstood, and a new, similar cycle begins.

This could be a relatively typical low performer workflow.

Compare this to

The process of one of the elite performers, where the developers always work in pairs and in close collaboration with the end-users, have unlimited access to production-like environments, and can therefore run functional end-to-end tests automatically, thereby making sure that quality is always built in.

They work through a playful trial-and-error process until the end-user smiles and cries “Yes - I love it!”. Then the code is promoted through an automated pipeline that verifies its quality, and it is automatically released into production, maybe as a canary deployment to only a subset of users, or perhaps behind a feature toggle that makes it available only to a specific customer segment.

Over the next couple of days, data is collected on how the feature is being used, and experiments are run to make it available to more and more users, either by volume or by segment.
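For readers who haven’t seen one, a toggle that supports both kinds of rollout (by segment and by volume) can be surprisingly small. The following is a minimal, hypothetical sketch: the `FeatureToggle` class, its fields and the hash-bucketing scheme are our own illustrative assumptions, not something taken from the DORA report or from any particular feature-flag product.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class FeatureToggle:
    """Hypothetical toggle: segment allowlist plus percentage-based rollout."""

    name: str
    rollout_percent: int = 0  # share of all users who get the feature (0-100)
    enabled_segments: Set[str] = field(default_factory=set)  # segments switched on explicitly

    def bucket(self, user_id: str) -> int:
        # Hash feature name + user id into a stable bucket in 0..99, so a user
        # keeps the same bucket for this feature across requests and deploys.
        digest = hashlib.sha256(f"{self.name}:{user_id}".encode("utf-8")).digest()
        return int.from_bytes(digest[:4], "big") % 100

    def is_enabled(self, user_id: str, segment: Optional[str] = None) -> bool:
        # Rollout by segment: a whole customer segment can be enabled at once.
        if segment is not None and segment in self.enabled_segments:
            return True
        # Rollout by volume: users whose bucket is below the percentage get it.
        return self.bucket(user_id) < self.rollout_percent


if __name__ == "__main__":
    toggle = FeatureToggle("new-checkout", rollout_percent=10,
                           enabled_segments={"pilot-customer"})
    users = [f"user-{i}" for i in range(1000)]
    canary = [u for u in users if toggle.is_enabled(u)]
    print(f"{len(canary)} of {len(users)} users in the 10% canary")

    toggle.rollout_percent = 50  # widen the rollout by volume
    widened = [u for u in users if toggle.is_enabled(u)]
    print(f"{len(widened)} of {len(users)} users after widening to 50%")
    print(toggle.is_enabled("user-1", segment="pilot-customer"))  # always True
```

Hashing the feature name together with the user id keeps each user in a stable bucket, so raising `rollout_percent` from 10 to 50 only adds users and never flips existing canary users back off.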

In the meantime, the developers and end-users have already moved on to the next exciting experiment.

A scavenger hunt for that coveted end-user cry: “Yes - I love it!”


If you need inspiration or practical help beefing up your SDLC with DevX, IDP, PE, AI or prompt-engineering initiatives, or any other good old DevOpsy concepts such as containerization, Infrastructure as Code, declarative pipelines, Semantic Versioning and the like, feel free to call on us and invite us for a coffee and an inspiring look at what contemporary DevOps can do for you!


¹ Quote: William Gibson, sci-fi writer and essayist.